Incipia blog

3 Considerations for Managing ASO A/B Testing

The field of A/B testing (both the testing-first thought process and the actual statistical implementation of test management) has been a highly effective technique in the tool belts of digital marketers, and has helped multitudes tune their assumptions, make continuous improvements and drive stronger positive outcomes.

Yet within A/B testing there are different subsets, each with its own nuances. Managing A/B testing for paid and owned marketing, for example, differs in some specific ways from A/B testing applied to earned marketing, such as app store optimization.

Augment your understanding of the different testing methods and challenges available in the field of App Store Optimization A/B testing strategy by reviewing the following set of considerations.

Consideration #1: The pros and cons of 6 ASO A/B testing alternatives

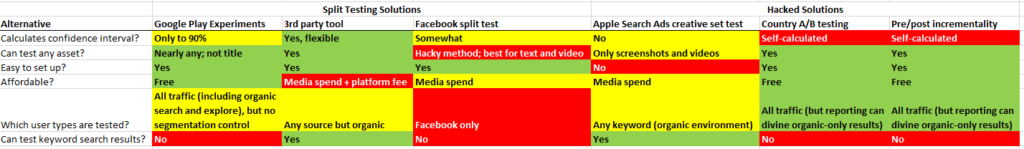

While there are several alternatives to doing ASO A/B testing, there is no one perfect A/B testing solution. Here are six of the most popular.

True A/B Testing

The following three methods enable randomized, true split testing with measurable statistical confidence, both of which are necessary for valid test results.

- Google Play Experiments - this is the traditional A/B testing system, available through the Google Play Console. Pros include that it is free and that it provides the ability to test in the app store UX; cons include a lack of customization over testing parameters and the risk of a poor performer dragging down overall performance.

- 3rd Party Tools - tools such as Store Maven or SplitMetrics use a mobile web page that mimics the App/Play Store to study user clickstream behavior between variants. Pros include customization of test parameters (including a search results test) and greater statistical configurability; cons include the premium expense of the platform plus a media fee, and the inability to test organic traffic.

- Facebook split testing - using Facebook Ads for split testing is appealing as it can benefit both ASO and paid UA. Pros mostly include the high speed of testing; cons include the media fee, lack of ability to truly test in the app store UX and the lack of ability to test organic traffic.

"Hacked" A/B Testing

These methods are cheaper than the prior three and solve the issue of not testing on a true organic audience/store UX, but don't allow for true split testing and therefore complicate gathering valid results.

- Apple search ads creative set testing - this hack is a new method made possible by Apple's new creative sets. Pros include testing with true organic search traffic (down to an exact match keyword) and in the store UX; cons include lack of customization over testing parameters, required media fee and the inability to truly split-test.

- Country A/B testing - this hack refers to testing one variant in one country and another variant in another country (or using a holdout country) and comparing the outcomes of each country. Pros include that it is free and allows testing of organic traffic and in the app store UX; cons include nearly everything else.

- Pre/post incrementality - this hack, also referred to as "sequential testing," is a very simple method wherein the tester measures the change in one country for the same period of time pre-and-post change. Pros include that it is free and allows testing of organic traffic and in the app store UX; cons include lack of control, validation, and the risk of a poor performer dragging down overall performance.
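To make the two "hacked" comparisons more concrete, here is a minimal Python sketch of a pre/post calculation and of a holdout-country adjustment. The function names and all conversion rates are hypothetical, and dividing by the holdout country's change is just one simple way to discount seasonality or store-wide shifts; it does not provide the randomization or statistical confidence of a true split test.

```python
def relative_change(pre: float, post: float) -> float:
    """Relative change in conversion rate from the pre period to the post period."""
    return post / pre - 1.0


def holdout_adjusted_lift(test_pre: float, test_post: float,
                          holdout_pre: float, holdout_post: float) -> float:
    """Pre/post lift in the test country, discounted by the change observed
    in a holdout country that kept the original assets (an assumption of
    this sketch: both countries share the same background trend)."""
    test_ratio = test_post / test_pre
    holdout_ratio = holdout_post / holdout_pre
    return test_ratio / holdout_ratio - 1.0


# Hypothetical conversion rates for equal-length pre and post periods.
raw = relative_change(pre=0.30, post=0.33)
adjusted = holdout_adjusted_lift(test_pre=0.30, test_post=0.33,
                                 holdout_pre=0.28, holdout_post=0.294)

print(f"raw pre/post lift:     {raw:+.1%}")
print(f"holdout-adjusted lift: {adjusted:+.1%}")
```

With these made-up numbers, the raw pre/post lift of roughly 10% shrinks to under 5% once the holdout country's own drift is taken into account, which is the kind of control a plain sequential test lacks.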

Once more, there is no "best of all" testing method. Different methods may be optimal for different use cases, and it will benefit you to consider how each fits your particular case, budget, risk tolerance and desired confidence, as well as the pros and cons of each method.

Consideration #2: The inability to segment Google Play Experiments may smother real outcomes

Google Play Experiments is the reigning best-case method; yet there is a potential downside to using this method lurking in the details.

One of the most vexing parts of managing A/B testing via Google Play Experiments is the frustrating lack of significant results that befalls the vast majority of Play Store tests. Oftentimes, the results of tests end up between negative 1-3% and positive 1-3%, netting out at no real change. While A/B testing is certainly a numbers game where most tests will either fail to improve the conversion rate or fail to become significant, the consistency with which we saw no change got us thinking about why this so often seemed to be the case, despite significant variations in what we were testing.
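To illustrate why a lift in the 1-3% range so often fails to reach significance, here is a minimal sketch of a classic two-proportion z-test in Python. The visitor and install counts are hypothetical, and the function name is our own; this is a textbook null hypothesis test, not necessarily the calculation Google Play Experiments runs internally.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rate between variants.

    Returns the z statistic and the two-sided p-value.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical test: 5,000 store visitors per variant, 30.0% vs 30.9%
# conversion rate, i.e. a 3% relative lift for variant B.
z, p_value = two_proportion_z_test(conv_a=1500, n_a=5000, conv_b=1545, n_b=5000)
print(f"z = {z:.2f}, p-value = {p_value:.2f}")
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```

Under these assumed numbers, the 3% relative lift is far from significant at the conventional 5% threshold, so a test of this size would be reported as "no real change" even if the lift were genuine.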

After Google released its organic insights treasure trove of search term data, we came across hard data that spurred a hypothesis as to why Google Play Experiments so often failed to produce significant results, and why it may not be the best option for exploratory/innovative A/B testing.

For many apps the share of downloads from branded searches vs non-branded keywords tilts far towards branded keywords. This makes sense considering that branded search traffic is the result of a company's years and millions invested into marketing and brand building.

It also makes sense that branded searchers have the most specific intent to download one particular app (the one they are searching for), and so we can assume that they are highly unlikely to be influenced by one store design vs another, provided both designs convey the brand well enough. Given this, our hypothesis came to be that the branded sub-segment of store traffic, if large enough, can by itself dilute results to the point of producing non-significant outcomes.

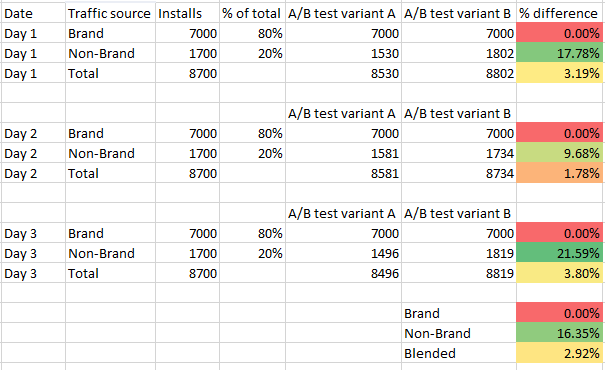

This example offers a simple illustration of the concept of branded inertia smothering what would otherwise be a big A/B testing win. In the data, branded traffic represents 80% of the total share of downloads, with non-branded traffic representing the remaining 20%. If branded traffic experiences no change in conversion rate between the two variants, then a theoretical average daily lift in non-branded conversion rate of roughly +16% is reduced to roughly +3% after branded dilution (20% of downloads × 16% lift ≈ 3.2%), which may not be enough to be labeled statistically significant or worth applying.
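The dilution arithmetic can be sketched in a few lines of Python. The 80/20 split and the 16% non-branded lift come from the example; the function name is our own, and the calculation assumes each segment's share is measured as its share of baseline downloads.

```python
def blended_lift(segments):
    """Overall relative lift in downloads, given (share, lift) pairs.

    `share` is the segment's share of baseline downloads and `lift` is the
    relative change in that segment's conversion rate under the new variant.
    """
    total_share = sum(share for share, _ in segments)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("segment shares must sum to 1")
    return sum(share * lift for share, lift in segments)


segments = [
    (0.80, 0.00),  # branded traffic: no change between variants
    (0.20, 0.16),  # non-branded traffic: roughly +16% lift
]

overall = blended_lift(segments)
print(f"all-up lift after branded dilution: {overall:+.1%}")
```

Changing the branded share in this sketch shows how quickly the all-up number converges toward zero as brand traffic grows, even when the non-branded lift stays the same.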

It's a matter of segmentation at this point.

Granted, the example above doesn't include the fact that all traffic (including inorganic traffic) is included in Google Play Experiments, too. In this case whether or not the additional traffic would further dilute the results or strengthen the results would depend on the degree to which the other traffic is primed to download. If the traffic comes from a lookalike 1% Facebook ad set for example, the store design may not really affect the user's decision and hence would further dilute the test. If the traffic comes from a top chart browsing user, then the store design may play more of a role in influencing the user to download or not, hence the results would become stronger.

Google Play Experiments don't allow for segmentation control, or even segmented reporting, leaving us with no way to confirm whether dilution may or may not be occurring.

This issue of the mismatch in intent between inorganic and organic user traffic is also one of the main gripes of using 3rd party A/B testing tools.

This is not to say that you should ignore the effects of your changes on branded or inorganic users, or to take their trends for granted regardless of what choice of assets you decide on. And the devil's advocate point of view can be successfully argued that only the strongest tests should survive: those which can overcome the inertia of brand and express their dominance consistently in the results. Darwinian concepts remain characteristically black and white and appealing for their effective simplicity, everywhere from nature, to startups, to marketing.

The main point is that if you want to be more results-driven and precise when innovating on your store listing assets, you may want to consider alternatives for measuring test effects on conversion rate for sub-segments (e.g. brand vs non-brand or non-brand organic vs inorganic), as opposed to testing the all-in-one effect.

Consideration #3: Consider the need for certainty and risk-taking

In the example of consideration #2, you may have developed an immediate opinion about whether a 3% all-up gain is worth pursuing or not, regardless of the potential double-digit non-branded improvements.

Let's stop for a moment and suppose you did apply that test and realized marginal gains, if any. While an unfortunate short-term result, consider the fact that app store algorithms are highly trend-oriented, and that over time, your keyword score would improve for those non-branded keywords, and eventually your positions could flip from 10 to 9, 9 to 8, and so on. In this case a larger all-up gain may begin to materialize as your new store assets convince more people to download, and the algorithms in response reward you with more organic visibility and so on, ultimately converting a short-term win of 3% into something much larger.

We could just as easily suppose that you didn't apply that test, seeking certainty that your results would overcome noise and become worth their 5 minutes of fame in the upcoming QBU. In this case you might continue testing and never come up with anything worth applying, skipping out on a potential 16%+ non-branded gain and the virtuous algorithmic cycle that would accompany it.

Not all risks are worth taking, but sometimes you need to do something to shake things up and make progress. Tests won't give you the magic answer to everything. In the end they should remain a tool in your toolkit rather than serving as the Oracle at Delphi.

Final Consideration: Bayesian vs null hypothesis A/B testing

Lastly, there is growing support for an alternative type of A/B testing method, which has the potential to overtake the traditional null hypothesis testing.

The alternative test is derived from Bayesian statistics. In TLDR business speak, Bayesian statistics make use of probabilistic inferences in the test to arrive at conclusions, and (in some cases) can produce conclusions with less data than a traditional null hypothesis A/B test requires. The output is also generally easier to interpret than that of a traditional null hypothesis test, and thus can lead to better-informed decisions.
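As a rough illustration of what a Bayesian readout looks like, here is a minimal Python sketch that estimates the probability that variant B's conversion rate beats variant A's. It assumes a uniform Beta(1, 1) prior and uses simple Monte Carlo sampling from the standard library; the counts and the function name are hypothetical, and this is not a description of how any particular testing tool implements its statistics.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   draws: int = 20000, seed: int = 0) -> float:
    """Estimate P(rate_B > rate_A) under independent Beta posteriors.

    With a Beta(1, 1) prior, the posterior for each variant's conversion
    rate is Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)  # fixed seed so the estimate is repeatable
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


# Hypothetical test: 5,000 visitors per variant, 1,500 vs 1,545 installs.
probability = prob_b_beats_a(conv_a=1500, n_a=5000, conv_b=1545, n_b=5000)
print(f"probability that B beats A: {probability:.0%}")
```

A statement such as "B is more likely than not to be the better variant, with this probability" is often easier to act on than a p-value, which is part of the interpretability appeal mentioned above.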

Some A/B testing systems (like Store Maven) make use of Bayesian statistics. Watch for this type of testing to rise in usage. Check out these posts for further reading on the subject of Bayesian testing:

- Bayesian Bandits testing for mobile apps @ Mobile Dev Memo via Eric Seufert

- It's Time to re-think A/B testing @ Mobile Dev Memo via Viktor Gregor

Thanks also to Luca Giacomel, a wealth of ASO knowledge, for introducing the concept of Bayesian statistics to us!

That’s all for today! Thanks for reading and stay tuned for more posts breaking down mobile marketing concepts.


Incipia is a mobile marketing consultancy that markets apps for companies, with a specialty in mobile advertising, business intelligence, and ASO. For post topics, feedback or business inquiries please contact us, or send an inquiry to projects@incipia.co
