Incipia blog

5 Pro A/B Testing Tips

Google Play's Experiments engine is hands down one of the most powerful and useful tools in an ASO practitioner's belt (for anyone with only an Android app, or with both an Android and an iOS app). Yet running Google Play A/B tests can also be very frustrating when your test results show a winner, but applying that winner produces no tangible improvement.

This post collects team Incipia's top 5 tips for running A/B tests, plus additional advice from Thomas Petit, ASO expert at 8fit.

For a more in-depth breakdown of how to run successful CRO efforts, check out our Advanced ASO book (Chapter 6: Increasing Conversion).

A/B Testing Tip #1: Realize what Google Play's 90% confidence interval really means

As Thomas points out in his SlideShare presentation, a 90% confidence level is not especially strict: it can lead to wild swings in test results, or to applied tests that don't produce real gains.

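So what does a 90% confidence interval of 3% to 15% mean? It does not mean that you can count on a 9% improvement in installs (the midpoint of the range). What it means is that:

If you ran the experiment 100 times and calculated an interval each time, about 90 of those intervals would contain the true change in installs. Any value in the 3% to 15% range, including the low end, is a plausible outcome.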

Kudos to Luca Giacomel for correcting this statement :)

Knowing this should discourage you from applying any test whose interval has a low lower bound. Internally, we prefer to act on tests whose lower bound is at least 5-10% (and which also follow the other best practices below).
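To make the lower-bound rule concrete, here is a minimal Python sketch that computes a 90% interval for the relative lift in install conversion rate and applies a 5% lower-bound threshold. Google Play does not publish its exact calculation, so the log risk-ratio normal approximation used here, the function names `lift_interval` and `worth_applying`, and the example numbers are all illustrative assumptions.

```python
import math
from statistics import NormalDist


def lift_interval(installs_a, visitors_a, installs_b, visitors_b, confidence=0.90):
    """Approximate CI for the relative lift in conversion rate of variant B over control A.

    Assumption: uses the log risk-ratio (Katz) normal approximation, which is
    not necessarily how Google Play computes the intervals it displays.
    """
    p_a = installs_a / visitors_a
    p_b = installs_b / visitors_b
    log_ratio = math.log(p_b / p_a)
    # Standard error of log(p_b / p_a) under the normal approximation
    se = math.sqrt(1 / installs_a - 1 / visitors_a + 1 / installs_b - 1 / visitors_b)
    # Two-sided critical value, about 1.645 for 90% confidence
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    low = math.exp(log_ratio - z * se) - 1
    high = math.exp(log_ratio + z * se) - 1
    return low, high


def worth_applying(lower_bound, min_lower_bound=0.05):
    """Rule of thumb from Tip #1: apply only if even the pessimistic end is a real gain."""
    return lower_bound >= min_lower_bound


if __name__ == "__main__":
    # Hypothetical test: 1,000 installs from 20,000 visitors vs. 1,100 from 20,000
    low, high = lift_interval(1000, 20000, 1100, 20000)
    print(f"90% interval for lift: {low:.1%} to {high:.1%}")
    print("Apply?", worth_applying(low))
```

With these made-up numbers the interval works out to roughly 2.6% to 18.0%. The midpoint looks healthy, but the lower bound sits below the 5% threshold, so by this rule the test would not be applied.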

A/B Testing Tip #2: Give tests enough data to increase confidence

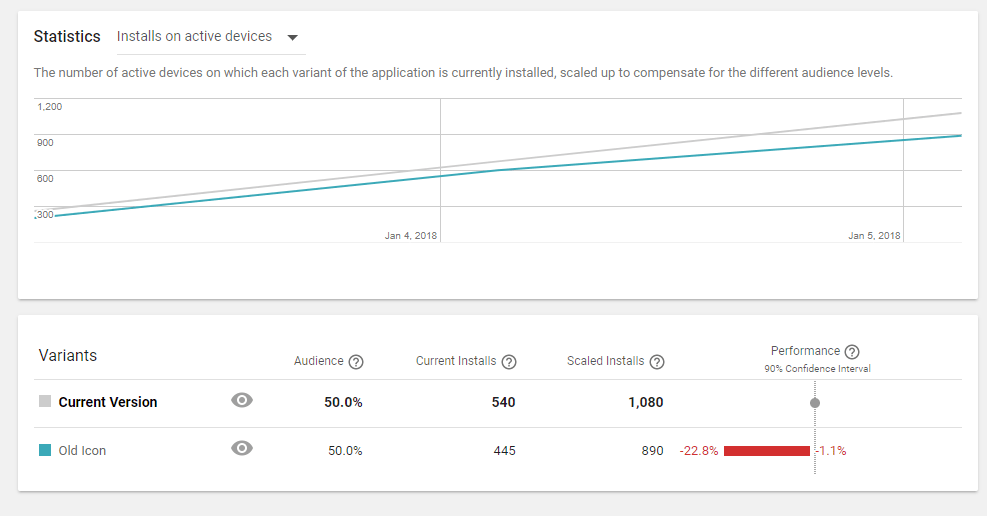

It's important to realize that the confidence interval Google displays is extrapolated from the current installs of each test variant. If your app sends only a little traffic to each variant, the observed difference in performance between variants may be very small (as small as a few installs), so the extrapolation from that data is more susceptible to a wide margin of error. In other words, your test may show a result that you won't actually see once you apply it.
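The effect of low traffic on the margin of error can be sketched with a standard Wald interval for the difference between two conversion rates. This is an illustrative assumption (Google Play's own calculation is not public), `diff_margin` is a hypothetical helper, and the 5% and 5.5% conversion rates are made-up example values representing the same 10% relative lift at every traffic level.

```python
import math
from statistics import NormalDist


def diff_margin(p_a, p_b, visitors_per_variant, confidence=0.90):
    """Half-width of a Wald interval for (p_b - p_a), assuming equal traffic per variant."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    variance = (p_a * (1 - p_a) + p_b * (1 - p_b)) / visitors_per_variant
    return z * math.sqrt(variance)


if __name__ == "__main__":
    p_a, p_b = 0.050, 0.055  # hypothetical conversion rates (a 10% relative lift)
    for n in (500, 5_000, 50_000):
        margin = diff_margin(p_a, p_b, n)
        low, high = (p_b - p_a) - margin, (p_b - p_a) + margin
        print(f"{n:>6} visitors/variant: difference {low:+.2%} to {high:+.2%}")
```

The interval spans zero at 500 and 5,000 visitors per variant and only clears zero at 50,000, even though the underlying lift is identical in all three cases. Because the margin shrinks with the square root of traffic, ten times the visitors narrows it by a factor of only about 3.2.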

Here are a few actions you can take to improve the confidence in your tests:

- Apply tests that have not been declared a winner at your own peril.

  - Although, as mentioned above, a 90% confidence interval is not especially strict, it is still based on a statistical significance calculation, and it is most likely a better guide than your own judgment of whether performance is good enough. If a test has not yet been declared a winner, there's a good chance that applying it will produce only nominal gains, or even losses.

- Run tests that gather at least 3-7 days of data, or that earn a good number of current installs (1,000+) per variant.

  - A/B tests can be subject to sampling bias, especially when you run the full 3 variants and your install volume is low. While Google should be assigning users to each variant at random, it's still possible that users from keywords or other sources that naturally convert higher or lower end up over-represented in one variant; that variant's performance will then be skewed higher or lower for reasons that have nothing to do with the variant itself. Because of this, you should also not apply tests that show a winner after fewer than 3 days.

- Run fewer variants simultaneously, or turn up your UA efforts, for faster results.

- Watch tests over time to see whether the performance trend is consistent.

  - Seeing a variant that "holds the lead" for several days straight can indicate that the results are consistent across multiple groups of randomly selected users, which is a good sign.
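The checklist above can be expressed as a simple pre-apply gate. This is a hypothetical sketch: the `VariantDay` record, the `safe_to_apply` function and the thresholds (3 days, 1,000 current installs, a lead held for 3 straight days) are assumptions drawn from the rules of thumb in this post, it takes a conservative reading that requires both the day and install minimums, and it assumes you copy the daily figures out of Play Console yourself (no export format is implied).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VariantDay:
    """One day of hypothetical experiment data for a single variant."""
    day: int               # days since the experiment started (1-based)
    current_installs: int  # current installs for the variant, as shown in the results
    leads_control: bool    # did the variant outperform the control on this day?


def safe_to_apply(history: List[VariantDay], winner_declared: bool,
                  min_days: int = 3, min_installs: int = 1_000,
                  min_lead_streak: int = 3) -> bool:
    """Return True only if every guardrail from Tip #2 is satisfied."""
    if not winner_declared or not history:
        return False  # don't apply tests without a declared winner
    latest = history[-1]
    if latest.day < min_days:
        return False  # too early: sampling bias can still skew the result
    if latest.current_installs < min_installs:
        return False  # too little data per variant
    # The variant should have held its lead over the most recent consecutive days
    recent = history[-min_lead_streak:]
    return len(recent) == min_lead_streak and all(d.leads_control for d in recent)


if __name__ == "__main__":
    # Hypothetical 5-day test where the variant led from day 2 onward
    history = [VariantDay(d, 400 * d, d >= 2) for d in range(1, 6)]
    print(safe_to_apply(history, winner_declared=True))
```

Requiring every check to pass keeps the gate deliberately strict; loosen individual thresholds if your traffic makes them impractical.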

A/B Testing Tip #3: Run a B/A test

Another tip from Thomas is to run a "B/A test," which can confirm whether the results of your test hold up once applied. In a B/A test, the variant that produced good results in the prior test (the "B") becomes the "A" (the control) of the next test, with the old control running as a variant. This tells you, from two separate tests, whether the same variant was selected as the winner each time. You may be surprised at how many B/A tests fail to select the same variant. If that happens, your best bet may be to continue testing new variants.
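Comparing the two tests can be reduced to a small decision function. In this hypothetical sketch, `ab_lift` is the (low, high) interval for B's lift over control A in the first test, and `ba_lift` is the interval for the old control A's lift over B once B is the control. Treating "B wins again" as A's whole interval falling below zero is an assumption about how strictly to read the second result.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (low, high) relative lift of the variant over the control


def ba_verdict(ab_lift: Interval, ba_lift: Interval) -> str:
    """Classify whether a B/A test confirms the winner of the original A/B test."""
    if ab_lift[0] <= 0:
        return "no winner in A/B test"  # B never cleared zero in the first test
    if ba_lift[1] < 0:
        return "confirmed"              # with roles swapped, A loses to B across its whole interval
    if ba_lift[0] > 0:
        return "contradicted"           # A now beats B: the first result did not hold
    return "inconclusive"               # interval spans zero; consider testing new variants


if __name__ == "__main__":
    print(ba_verdict((0.03, 0.15), (-0.12, -0.02)))
```

Both "contradicted" and "inconclusive" correspond to the case described above where the B/A test fails to select the same variant.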

A/B Testing Tip #4: Measure "Real Gains" from your test

In addition to, or instead of, running a B/A test, you should also measure the results of a test after applying it by comparing your conversion rate in the period before the test ran with the period after you applied it. Be sure to measure results for the specific country you ran the test in, and use the period before the test began as your baseline, since the test itself could have affected your conversion rate while it was running. One week is usually a good preliminary window, but several weeks will reveal the trend more reliably.

Be aware that user acquisition data may not be fully updated until days or even weeks after the date it covers.
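A before/after comparison like this can be scripted once you have daily figures for the country the test ran in. The sketch below is hypothetical: it assumes you have collected daily store listing visitors and installs yourself (no specific Play Console export format is implied), uses the week before the test as the baseline, and refuses to score a post-apply window that runs past `data_complete_through`, the last date whose data you consider settled. The function names are illustrative.

```python
from datetime import date, timedelta
from typing import Dict, Tuple

# Hypothetical daily data for ONE country: date -> (store listing visitors, installs)
DailyStats = Dict[date, Tuple[int, int]]


def conversion_rate(stats: DailyStats, start: date, end: date) -> float:
    """Pooled install conversion rate over [start, end], inclusive."""
    visitors = installs = 0
    day = start
    while day <= end:
        v, i = stats.get(day, (0, 0))
        visitors += v
        installs += i
        day += timedelta(days=1)
    if visitors == 0:
        raise ValueError("no data in window")
    return installs / visitors


def real_gain(stats: DailyStats, test_start: date, applied_on: date,
              data_complete_through: date, window_days: int = 7) -> float:
    """Relative change in conversion rate after applying a winner, vs. the pre-test baseline."""
    # Baseline: the window_days immediately BEFORE the test began, because the
    # running test may itself have shifted conversion.
    before = conversion_rate(stats, test_start - timedelta(days=window_days),
                             test_start - timedelta(days=1))
    after_end = applied_on + timedelta(days=window_days - 1)
    if after_end > data_complete_through:
        raise ValueError("post-apply window includes days whose data may still be updating")
    after = conversion_rate(stats, applied_on, after_end)
    return after / before - 1
```

Raising an error rather than silently returning a number makes it harder to draw conclusions from acquisition data that is still being backfilled.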

A/B Testing Tip #5: End poor tests after 3 days

Running counter to the advice to run tests for longer is the idea of ending tests that produce significantly negative results for 3 consecutive days (or even 2, but never just 1), even if no winner has been declared yet. This is because A/B tests affect your app's real performance: poor variants have a real, negative impact on your app's overall ASO health, which is the opposite of the goal of A/B testing. While it is possible, it is very unlikely that a variant with a large negative performance trend will recover and outperform by a wide margin, especially if your test has high traffic and few variants.
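The early-stopping rule can be written as a few lines of Python. In this hypothetical sketch, `daily_intervals` holds one (low, high) lift interval per day, oldest first, and "significantly negative" is assumed to mean the whole interval lies below zero; substitute your own threshold if you read results differently.

```python
from typing import Sequence, Tuple


def should_end_early(daily_intervals: Sequence[Tuple[float, float]],
                     consecutive_days: int = 3) -> bool:
    """Return True if the variant was significantly negative on each of the last N days."""
    if consecutive_days < 2:
        raise ValueError("never end a test on a single bad day")
    recent = daily_intervals[-consecutive_days:]
    # Every recent day must have its entire interval below zero
    return len(recent) == consecutive_days and all(high < 0 for _, high in recent)


if __name__ == "__main__":
    print(should_end_early([(-0.20, -0.05), (-0.18, -0.04), (-0.22, -0.06)]))
```

Setting `consecutive_days=2` gives the more aggressive variant of the rule, while the guard enforces the advice never to stop on one day's data.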

That's all for now, folks! Be sure to bookmark our blog, sign up for our email newsletter to get new post updates, and reach out if you're interested in working with us to optimize your app's ASO or mobile marketing strategy.

Incipia is a mobile app development and marketing agency that builds and markets apps for companies, with a specialty in high-quality, stable app development and keyword-based marketing strategy, such as App Store Optimization and Apple Search Ads. For post topics, feedback or business inquiries please contact us, or send an inquiry to hello@incipia.co.
